Large Language Models (LLMs), especially the “generative” kind, have taken the world by storm, and Retrieval-Augmented Generation (RAG) has become the predominant technique for getting LLMs to answer questions based on our own custom information.

I understand there are plenty of tools on the market today that help set up RAG in minutes, but before using the fancy tools that hide all the implementation details, you might want to understand what is really going on under the surface. And there is no better way to learn how the wheels turn than to write the code yourself. So, here is a step-by-step walkthrough with thorough explanations.

I will be using the OpenAI python library.

Requirements:

- Docker (or a python environment with langchain, openai, pandas, and numpy)

- OpenAI API access

- Interest in understanding how things work

If using the Docker route, you can use this Dockerfile:

Build and run it mounting the local folder where you will write your python code:

docker build . --tag rag

docker run --rm -it -v $(pwd):/src rag bash

From here on, if you are using the Docker route, write all the code in the local folder outside of Docker and run it in the shell of the container. Visual Studio Code makes this very easy, but it can be done manually, too.

So, the first thing you will need is to set up your variables in the environment. On Linux flavors:

export OPENAI_API_KEY="xxxx"

Interacting with ChatGPT

Perfect, so now we will just chat with ChatGPT via python.
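Here is a minimal sketch of such a chat call, assuming the 1.x version of the OpenAI python library and the `gpt-3.5-turbo` model name. The helper names (`build_messages`, `ask_chatgpt`), the system prompt, and the sample question are illustrative assumptions, and the client is passed in so the functions can be tried without network access.

```python
def build_messages(question):
    """Build the message list for a plain chat request (no extra context)."""
    return [
        # Assumed system prompt; any short instruction works here.
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": question},
    ]


def ask_chatgpt(client, question, model="gpt-3.5-turbo"):
    """Send one question to the chat completions endpoint and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(question),
    )
    return response.choices[0].message.content


# Usage (needs network access and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   client = OpenAI()  # reads OPENAI_API_KEY from the environment
#   print(ask_chatgpt(client, "Is Peter a musician?"))
```

Without any context, the model can only answer a question like this from its training data.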

As you can see, writing code to chat with ChatGPT is extremely simple. But, at this point, ChatGPT is just answering from its own background knowledge (billions of training documents). For ChatGPT to answer questions from our own knowledge base, we need to find a way to inject our data into that conversation. That is not quite as easy: ChatGPT cannot be “fed” large amounts of data, so we need to find the most relevant documents ourselves and provide them to ChatGPT as context for the question. And we would prefer to do so without implementing yet another LLM, and without re-training or fine-tuning these models every time our documents change. Let me show you how to do just that.

Embedding vectors

In order to get ChatGPT to answer the question based on your content, first you need to understand a little bit about embeddings.

An embedding is a vector representation of the semantics of a string. It is what enables us to use mathematical concepts, tools and techniques to manipulate and compare strings. Let me show you an example:
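A minimal sketch of requesting embeddings for a couple of texts, assuming the 1.x OpenAI python library and the text-embedding-ada-002 model. The function name `get_embeddings` and the sample texts in the usage comment are assumptions made for illustration.

```python
EMBEDDING_MODEL = "text-embedding-ada-002"


def get_embeddings(client, texts, model=EMBEDDING_MODEL):
    """Return one embedding (a list of floats) per input text, in input order."""
    response = client.embeddings.create(model=model, input=texts)
    # Each returned item carries the index of the input it belongs to.
    ordered = sorted(response.data, key=lambda item: item.index)
    return [item.embedding for item in ordered]


# Usage (needs network access and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   client = OpenAI()
#   results = get_embeddings(client, ["A short text", "A much longer second text ..."])
#   print(len(results))      # one vector per text
#   print(len(results[0]))   # number of dimensions of the first vector
```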

The first thing you will notice is that the content of “results” is huge. It is a very large array of floating point numbers that represent the text. Those numbers are not an encrypted or codified version of the text, they represent the semantic meaning of the text in high-dimensional vectors.

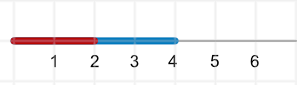

To attempt to understand these, let’s imagine that I want to represent people with numbers. I think I could do this easily with age. I can represent a 2 year old person with a number 2 and a 4 year old with a number 4. Since we only have one dimension, I could represent them as lines and it would look something like this:

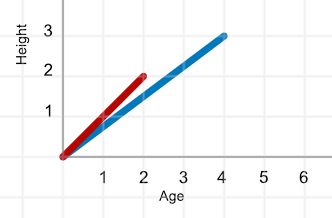

But then, if I would like to add a height component in feet, the representation would have to add a second dimension and would look like this:

The information now has two dimensions, and while we could just draw a point at the intersection of the age and height, we are really more interested in seeing the vector (remember, magnitude and direction). In this case, the 2 year old is represented by a vector of two dimensions:

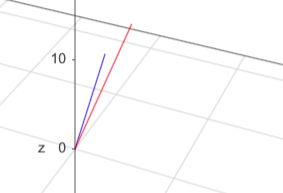

Things get a tad more complex if I add a third dimension: weight in kilograms. The vector now has a different value in each dimension, and the graph is now tridimensional:

Notice that the direction of a two-dimensional vector was represented by the angle of the line, but that is not the case beyond two dimensions. Adding a fourth dimension makes it very difficult to draw in a two-dimensional system like our screens, and moves us into a realm that is difficult to visualize. Yet describing these two individuals along four different dimensions, each represented by a number, is what allows us to use existing algorithms and tools to manipulate these non-numeric objects.

Now, back to the results we got from the request to create a vector embedding of our text. Large Language Models (LLMs) evaluate the text against a large number of dimensions (often more than a thousand) and return the coordinates that describe the text as a high-dimensional vector. In the case of the text-embedding-ada-002 model, the vector has 1,536 dimensions.

Also, notice that the vectors for both texts have the same number of dimensions; the length of the text does not affect the number of dimensions it is evaluated against. That is key, because it makes comparing the two vectors possible: they represent the text against the same dimensions.

Vector similarity

Now that we have successfully created embeddings, we need to figure out how they will help us accomplish AI tasks. First of all, let’s notice that what we have done is to create a representation of the semantic meaning of those texts using vectors. Second, vectors are mathematical concepts, and we have mathematical ways to deal with them. For example,
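A minimal sketch of such a comparison, using cosine similarity: the dot product of the two vectors divided by the product of their lengths. The `cosine_similarity` helper below is written with numpy for illustration (an assumption; a library version would work equally well), and the vectors [2, 5] and [5, 2] are assumed two-dimensional inputs built from the numbers two and five.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Same two numbers in each vector, but in different dimensions.
similarity = cosine_similarity([2, 5], [5, 2])
print(f"{similarity:.2%}")
```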

Notice that I compared two vectors of two dimensions, and that both have the same numbers, two and five, yet, the similarity between them is only 68%.

Let’s increase the dimensions:

The similarity is even lower, these two vectors are definitely going into different directions. Remember that even though we have two tens, two fives, etc., the comparison is by dimension, so the function is comparing 1 to 10 in the first dimension, 2 to 5 in the second, and so forth. The comparison by dimension finds these two vectors to be vastly different.
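The comparison by dimension described above can be sketched as follows. The exact vectors are an assumption inferred from the description (1 against 10 in the first dimension, 2 against 5 in the second, and so forth), and `cosine_similarity` is the same illustrative numpy helper, not a specific library function.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Assumed vectors: the same four numbers, in reverse order.
a = [1, 2, 5, 10]
b = [10, 5, 2, 1]

# The dot product pairs values dimension by dimension, so the large
# values never line up with each other.
products = [x * y for x, y in zip(a, b)]
similarity = cosine_similarity(a, b)
print(products, f"{similarity:.2%}")
```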

The point will be reinforced if we use totally different numbers in both vectors, but that keep a similar direction:

As you can see, these completely different sets of numbers are actually 98% similar because their directions are almost identical (cosine similarity measures the angle between vectors and ignores their magnitude).

Now let’s compare the values of the 2 and 4 year olds:

Wow, greater than 99% similar, what the cosine_similarity function is telling us is that these two vectors represent almost the same thing. If you think about it, both vectors represent a child at different moments of their life, thus the similarity.

What about comparing the 2 year old vector to that of an adult? Well, that proves to be very dissimilar:

These two individuals are so different that this superficial comparison over only four dimensions considers them to be very far apart.

Embedding similarity

Now that we have a better understanding of how two vectors can be compared, let’s go back to the two vectors we got from OpenAI’s text-embedding-ada-002 and compare them:

When comparing embedding vectors for text similarity, even as dissimilar as these were, we will observe that, semantically, their similarity is usually above 70%, so a 72% is low.

So, let’s change the texts to some similar texts:

Ok, these two texts share three words and are only different in one, yet the similarity is only 86%.

How about two sentences that pretty much mean the same thing:

These sentences only share one word, yet they are considered 96% similar, because they basically mean the same thing.

If we pass two unrelated sentences:

As expected, slightly above 70%, but if we add some sort of connection between the two sentences:

The cosine similarity finds a stronger relation between the last two sentences because of the connection to the United States.

Also, it should be noted that, depending on the model, the semantic vectorization of text can also work across languages:

Some models work better than others across languages; these examples were produced with OpenAI’s text-embedding-ada-002 model.

Searching for similar content

Excellent, now we know how to ask ChatGPT a question, we also know how to create vector embeddings of text, and we now understand how those vectors can be used with mathematical principles to compare their similarity.

Now we’ll get into the process of searching for similar content.

The process is simple, but let’s consider one more thing. Creating embeddings for a large list of documents is expensive in time and money, so we should find a way to create them only once, or at least only as often as the content changes. That said, the process should be pretty simple:

Prep

- go through the list of all of your documents

- get an embedding for each document

- save the embedding along with a pointer to the corresponding document

- persist this list (on a vector database, or a persisted Pandas dataframe)

On every question

- get an embedding for the question (or subject)

- go through the saved list of embeddings/documents

- compare the embedding of the document with the embedding of the question

- save the comparison score with the document

- Now, take all the documents and filter them based on some threshold (>75% for example)

- Sort the matching ones by the score

- Pick the top one… and the document will probably contain an answer to your question
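The per-question steps above can be sketched in a few lines. This is a minimal illustration, not a production search: the three-dimensional vectors are toy stand-ins for real embeddings, and the names `find_best_match`, `documents`, and `question_vec` are assumptions made for the example.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def find_best_match(question_vec, documents, threshold=0.75):
    """Score every (text, vector) pair, filter by threshold, return the top (text, score) or None."""
    scored = [(text, cosine_similarity(vec, question_vec)) for text, vec in documents]
    matches = [pair for pair in scored if pair[1] > threshold]
    matches.sort(key=lambda pair: pair[1], reverse=True)
    return matches[0] if matches else None


# Toy vectors prepared once, ahead of time (the "Prep" phase).
documents = [
    ("doc about music", [0.9, 0.1, 0.0]),
    ("doc about cooking", [0.1, 0.9, 0.1]),
    ("doc about travel", [0.0, 0.2, 0.9]),
]
question_vec = [0.8, 0.2, 0.1]  # toy embedding of the question

best = find_best_match(question_vec, documents)
print(best)
```

Returning `None` when nothing passes the threshold lets the caller decide what to do when no document is relevant enough.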

For this exercise I won’t be using a vector database, although it is definitely the way to go for a production deployment. Instead, I will just go the easy route and use a pandas dataframe.

Let’s add pandas and numpy to our file:

import pandas as pd

import numpy as np

And let’s add a list of texts to a dataframe:
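A minimal sketch of building such a dataframe. Only “Peter plays the clarinet” comes from this article; the other sentences are hypothetical filler added for illustration.

```python
import pandas as pd

texts = [
    "Peter plays the clarinet",         # mentioned later in the article
    "Mary bakes bread every Sunday",    # hypothetical
    "The train to Boston leaves at 9",  # hypothetical
    "John is learning to play chess",   # hypothetical
]

# One row per text, in a single column named "texts".
df = pd.DataFrame({"texts": texts})
print(df)
```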

So, now we have a Dataframe with one column: texts.

Now we will move the creation of the embeddings into a function that we can call repeatedly. This is key at this point, since we are going to be adding a new column to the dataframe called embedding which will store the result of calling the get_embedding function on each text.
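A minimal sketch of that function and of the new column, assuming the 1.x OpenAI python library. The optional `client` argument and the `add_embeddings` helper are assumptions of this sketch; they make it possible to call `get_embedding(text)` with a single argument while still allowing a stand-in client for testing.

```python
import pandas as pd


def get_embedding(text, client=None, model="text-embedding-ada-002"):
    """Return the embedding vector (list of floats) for a single text."""
    if client is None:
        # Imported lazily so the sketch loads even without the package installed.
        from openai import OpenAI
        client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.embeddings.create(model=model, input=[text])
    return response.data[0].embedding


def add_embeddings(df, client=None):
    """Return a copy of df with an `embedding` column computed from `texts`."""
    out = df.copy()
    out["embedding"] = out["texts"].apply(lambda t: get_embedding(t, client=client))
    return out


# Usage (one API call per row; needs network access and OPENAI_API_KEY):
#   df = add_embeddings(df)
#   print(df)
```

Creating a client on every call is wasteful for long lists, so for anything beyond an experiment you would create it once and pass it in.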

As you can see, each row in the dataframe now has the vector that represents the text.

Now, we need to get an embedding for the question:

question = "Is Peter a musician?"

question_embedding = get_embedding(question)

And then add a new column to the dataset to store the similarity between the vector of the text and the vector of the question.
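A minimal sketch of adding that column. To keep it runnable without API access, the embeddings here are toy three-dimensional vectors (an assumption; real ones would come from `get_embedding`), and `cosine_similarity` is an illustrative numpy helper.

```python
import numpy as np
import pandas as pd


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b."""
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


# Toy stand-ins for the real embedding vectors.
df = pd.DataFrame({
    "texts": ["Peter plays the clarinet", "Mary bakes bread every Sunday"],
    "embedding": [[0.9, 0.1, 0.0], [0.1, 0.9, 0.1]],
})
question_embedding = [0.8, 0.2, 0.1]  # toy embedding of the question

# Compare every stored vector with the question vector.
df["similarity"] = df["embedding"].apply(
    lambda vec: cosine_similarity(vec, question_embedding)
)
print(df[["texts", "similarity"]])
```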

Great, so now our dataframe consists of rows with the original text, the vector of the text, and the similarity to the question vector.

We can probably just eyeball the list and pick the closest line, but let’s attempt to do it in code and see if it gets us a reasonable answer.

The next step is usually to filter the list so that the following steps deal with a smaller subset. We do this by keeping only the rows whose similarity is greater than a certain threshold; in this case we chose 80%.
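A minimal sketch of that filter with pandas. The similarity scores below are made-up numbers for illustration, not results from the embedding model.

```python
import pandas as pd

# Hypothetical scores, for illustration only.
df = pd.DataFrame({
    "texts": ["text A", "text B", "text C", "text D"],
    "similarity": [0.91, 0.78, 0.83, 0.72],
})

THRESHOLD = 0.80

# Boolean indexing keeps only the rows above the threshold.
filtered = df[df["similarity"] > THRESHOLD]
print(filtered)
```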

We already see that the list got smaller, only 5 rows meet the criteria.

The next step is to sort the filtered rows in similarity order and pick the top match:
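A minimal sketch of the sort and pick, again with made-up similarity scores (only “Peter plays the clarinet” comes from this article; the other rows and all numbers are hypothetical).

```python
import pandas as pd

# Hypothetical rows that already passed the threshold filter.
filtered = pd.DataFrame({
    "texts": ["John is learning to play chess", "Peter plays the clarinet"],
    "similarity": [0.81, 0.88],
})

# Highest similarity first, then take the first row.
ranked = filtered.sort_values("similarity", ascending=False)
top = ranked.iloc[0]
print(top["texts"], top["similarity"])
```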

Excellent, using the embeddings we only needed plain mathematics to find the closest document with content that could be used to answer our question!

Finally, ask ChatGPT to answer a question based on our Context (RAG)

Now we have all the elements we need to finally get ChatGPT to use our context to answer the question. By inspecting the statement that the math helped us pick, we can probably guess the answer already. But in real-world cases this could be a very long document; besides, “Peter plays the clarinet” is not a direct answer to the question. For that we will use ChatGPT, a Large Language Model that is capable not only of understanding the question and the context, but also of generating an appropriate response.

And now, the moment of truth, we will get the value of the top answer that we found matching the question and pass it to the chat call as context:
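A minimal sketch of passing the retrieved text as context, assuming the 1.x OpenAI python library and the `gpt-3.5-turbo` model name. The prompt wording and the helper names (`build_rag_messages`, `answer_with_context`) are assumptions of this sketch; many other prompt layouts would work.

```python
def build_rag_messages(question, context):
    """Combine the retrieved context and the question into chat messages."""
    return [
        {
            "role": "system",
            "content": (
                "Answer the question using only the provided context. "
                "If the context does not contain the answer, say you do not know."
            ),
        },
        {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {question}"},
    ]


def answer_with_context(client, question, context, model="gpt-3.5-turbo"):
    """Ask the chat model to answer the question from the given context."""
    response = client.chat.completions.create(
        model=model,
        messages=build_rag_messages(question, context),
    )
    return response.choices[0].message.content


# Usage (needs network access and OPENAI_API_KEY in the environment):
#   from openai import OpenAI
#   context = "Peter plays the clarinet"  # the top match from the similarity search
#   print(answer_with_context(OpenAI(), "Is Peter a musician?", context))
```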

That is what RAG is all about. Augmenting the context for ChatGPT to answer a question by retrieving information using vectorized data.

Now, of course, there is plenty more involved in creating a production-level application using these technologies. The newer embedding models create larger vectors (higher accuracy, but slower search), but also allow for shortening them, which can be used to do a two-pass search: filter the total set of documents with a less granular vector, then use the higher-dimension vector on the smaller set. I have only used strings here, but the same principles work for larger documents. The documents will need some processing, such as cracking them open (extracting text from PDFs, etc.) and chunking (breaking the documents into smaller units, potentially overlapping the chunks to avoid chopping the flow). You will also want to use a vector database and implement hybrid searches (keyword, semantic, etc.).
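As an illustration of chunking with overlap, here is a minimal sketch that splits a string into fixed-size character windows. Splitting by characters, the function name `chunk_text`, and the default sizes are all assumptions made for the example; real pipelines often split on tokens, sentences, or paragraphs instead.

```python
def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into chunks of up to chunk_size characters, each sharing
    `overlap` characters with the previous chunk."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be >= 0 and smaller than chunk_size")

    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last window already reached the end of the text
    return chunks


print(chunk_text("abcdefghij", chunk_size=4, overlap=2))
```

Each chunk would then get its own embedding and its own row in the dataframe or vector database.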

So, yes, there is plenty of other work to be done to take this application to production, but hopefully if you have read this far, you have a greater understanding of how RAG works.